Best practices for transparency in machine-generated personalization

Laura Schelenz, Avi Segal, Kobi Gal

The Problem:

- Personalization is widely used in AI-based systems to improve users’ experience.

- However, users are becoming increasingly vulnerable to online manipulation due to opaque personalization.

- Such manipulation decreases users’ levels of trust, autonomy, and satisfaction.

- Increasing transparency is an important goal for personalization-based systems.

- Unfortunately, system designers lack guidance on how to increase transparency in their systems.

Transparency Definition:

(Based on a review of the ethics literature)

A practice of system design that centers on the disclosure of information to users, where this information should be understandable to the respective user and provide insights about the system. Specifically, the information disclosed should enable the user to understand why and how the system may produce a certain outcome, or why and how it has produced one (e.g., why a user received a certain personalized recommendation).

Best Practices and Checklist for Transparency:

- System designers can consult the best practices below to evaluate transparency in their system.

- To simplify their work, we have developed a checklist of 23 questions that they can fill out.

- They can then make adjustments to their system based on their checklist replies.

- We help system designers understand where in the system architecture they are strong on transparency and where improvements are needed.
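The checklist workflow described above can be sketched in a few lines of Python. This is a minimal illustration, assuming each question is tagged with one of the architecture components (Input, Processing, Output, User Control) and answered "yes", "partial", or "no"; the reply labels, the example questions, and the function name `improvement_areas` are hypothetical, since the actual 23 questions are not reproduced here.

```python
from collections import defaultdict

# Hypothetical reply labels; the exact answer scale is an assumption.
YES, PARTIAL, NO = "yes", "partial", "no"

# The four architecture components used to organize the checklist.
COMPONENTS = ("Input", "Processing", "Output", "User Control")


def improvement_areas(replies):
    """Group the questions that are not fully satisfied by component.

    `replies` is a list of (component, question, reply) tuples.
    Returns a dict mapping every component to the questions answered
    "partial" or "no", i.e. where improvements are needed.
    """
    gaps = defaultdict(list)
    for component, question, reply in replies:
        if component not in COMPONENTS:
            raise ValueError(f"unknown component: {component}")
        if reply not in (YES, PARTIAL, NO):
            raise ValueError(f"unknown reply: {reply}")
        if reply != YES:
            gaps[component].append(question)
    # Keep the components in their architectural order.
    return {c: gaps[c] for c in COMPONENTS}


# Hypothetical example questions, not taken from the actual checklist.
example = [
    ("Input", "Does the system tell users which data it collects?", YES),
    ("Processing", "Does the system explain how recommendations are computed?", NO),
    ("Output", "Can users see why they received a recommendation?", PARTIAL),
    ("User Control", "Can users adjust the data used for personalization?", NO),
]

for component, questions in improvement_areas(example).items():
    status = "strong" if not questions else f"{len(questions)} to improve"
    print(f"{component}: {status}")
```

Grouping by component, rather than returning a flat list, mirrors the goal of showing designers where in the architecture the gaps lie.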

| Architecture component | Best practices |
|---|---|
| Input | |
| Processing | |
| Output | |
| User Control | |

User control: Enabling users to interact with the system and adjust its elements to their respective needs and preferences.

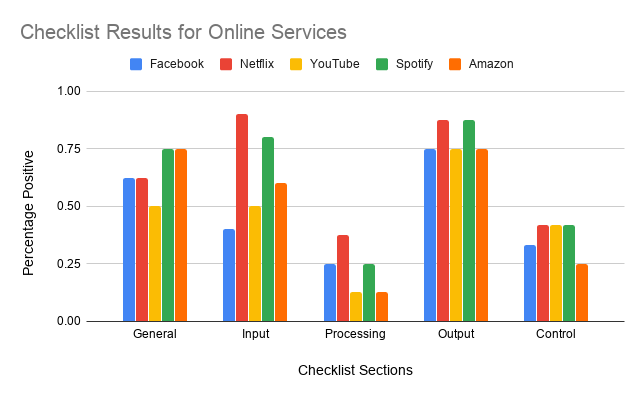

Case Study:

- Applied the checklist to several online services as a tool for reflection and assessment.

- Took a system designer’s point of view, using the authors’ own accounts in these systems.

- Checked the information and options available to registered users.

- Y-axis: the percentage of positive and partial replies in each checklist section.
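The per-section scores plotted on the Y-axis can be computed as in the following sketch. It assumes each reply is labeled "yes" (positive), "partial", or "no", and it reports the positive and partial shares separately so they can be stacked or summed; the labels, the function `section_percentages`, and the example replies are hypothetical and are not the case study's data.

```python
from collections import Counter

# Hypothetical reply labels; the actual answer scale is an assumption.
POSITIVE, PARTIAL, NEGATIVE = "yes", "partial", "no"


def section_percentages(replies):
    """Return {section: (percent_positive, percent_partial)}.

    `replies` maps each checklist section to the list of replies given
    to the questions in that section for one online service.
    """
    result = {}
    for section, answers in replies.items():
        if not answers:
            raise ValueError(f"section {section!r} has no replies")
        counts = Counter(answers)  # missing labels count as 0
        total = len(answers)
        result[section] = (
            100.0 * counts[POSITIVE] / total,
            100.0 * counts[PARTIAL] / total,
        )
    return result


# Hypothetical replies for one service; these are not the poster's results.
service = {
    "Input": ["yes", "yes", "partial", "no"],
    "Processing": ["no", "no", "partial", "no"],
}

for section, (pos, part) in section_percentages(service).items():
    print(f"{section}: {pos:.0f}% positive, {part:.0f}% partial, "
          f"{pos + part:.0f}% combined")
```

Normalizing by the number of questions in each section keeps sections of different sizes comparable on the same axis.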

Conclusion and Future Research:

Takeaways:

- Developed a transparency definition, best practices, and a checklist for system designers.

- Applied the checklist to several online services.

- Current systems lack transparency, especially with regard to “Processing” and “User Control”.

Limits of Transparency:

- No guarantee that designers and users really understand models: lack of resources, human capital, digital and technical literacy.

- Disclosed information can obscure details and confuse users.

- Transparency vs. privacy of users/designers/companies.

- Balancing transparency and economic interests.

Need for Personalized Transparency:

- What is transparent to some may not be transparent to others.

- Tailor information to user needs and capabilities.

- How does other users’ behavior affect a particular user?


Selected literature:

- Brent Daniel Mittelstadt, Patrick Allo, Mariarosaria Taddeo, Sandra Wachter, and Luciano Floridi. 2016. The Ethics of Algorithms: Mapping the Debate. Big Data & Society 3, 2 (2016). https://doi.org/10.1177/2053951716679679

- Avi Segal, Kobi Gal, Ece Kamar, Eric Horvitz, and Grant Miller. 2018. Optimizing interventions via offline policy evaluation: Studies in citizen science. In Thirty-Second AAAI Conference on Artificial Intelligence.

- Jenna Burrell. 2016. How the Machine ‘Thinks’: Understanding Opacity in Machine Learning Algorithms. Big Data & Society 3, 1 (2016). https://doi.org/10.1177/2053951715622512

- High-Level Expert Group on Artificial Intelligence. [n.d.]. Ethics Guidelines for Trustworthy AI. https://ec.europa.eu/digital-single-market/en/news/ethics-guidelines-trustworthy-ai

- Matteo Turilli and Luciano Floridi. 2009. The Ethics of Information Transparency. Ethics and Information Technology 11, 2 (2009), 105–112. https://doi.org/10.1007/s10676-009-9187-9

- Mike Ananny and Kate Crawford. 2018. Seeing Without Knowing: Limitations of the Transparency Ideal and its Application to Algorithmic Accountability. New Media & Society 20, 3 (2018), 973–989. https://doi.org/10.1177/1461444816676645

- Avi Segal et al. 2016. Intervention strategies for increasing engagement in crowdsourcing: platform, predictions, and experiments. In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence.