Interactions between Inter- and Intra-Individual Differences in Eye Movements

Christiane B. Wiebel-Herboth, Matti Krüger, Martina Hasenjäger

Honda Research Institute Europe GmbH

Personalizing gaze-based applications can have various advantages, from better overall performance to higher acceptance by users. However, this presumably only holds true if observer models are robust to deviations that are introduced by changes in context. One possible change in context is a change in observer state. To successfully adapt gaze-based application models, it is important to understand how such intra-individual differences interact with inter-individual differences in eye movement behavior. Here, we present first results from a visual comparison task that investigates this topic.

Background

Previous work has shown that eye movements vary as a function of the individual [1,2,3] but also as a function of visual stimuli, task and the observer’s cognitive state [4,5]. It has been shown that adapting to individual user models can have a significant impact on gaze-based applications [6,7]. A seamless transition between different contexts would be an ultimate goal of using gaze patterns for user modeling and personalization in practical applications. Therefore, an important question is how inter- and intra-individual differences in eye movements interact.

Methods

We tackle this question in a pilot experiment exploring eye movement behavior in a visual comparison task under two experimental conditions that aim to induce different observer states: a time-unconstrained (relaxed) condition (TUC) and a time-constrained (stressful) condition (TC). To evaluate inter- and intra-individual differences in eye movement behavior, we analyze two measures: mean fixation duration and scan path data.

Participants: Data from 10 out of 12 observers were valid and included in the data analysis (ME age = 38, [22:53])

Apparatus: Gaze data were recorded using a head-mounted Pupil Labs eye tracker (120 fps, 60 fps world camera) [8]. Stimuli were shown on a regular computer screen in a normal office environment.

Stimuli & Procedure: 44 photographs of varying indoor and outdoor scenes [9] were shown. The image data set was split in half for the two experimental conditions. Difficulty was assessed and counterbalanced between the two samples.

Images from Shuffle database (Large Change Images) [9].

Analysis & Results

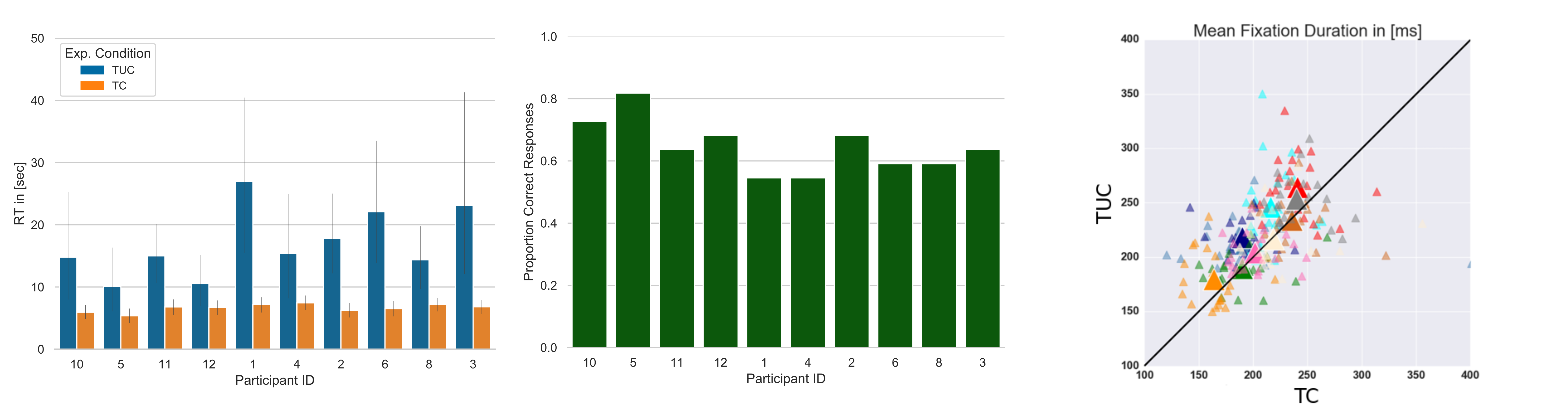

Task Performance

All participants reported that they felt the time pressure in the TC condition, which was also reflected in a reduced search accuracy: on average 65% (TC) vs. 100% (TUC). Mean RT for correct trials was m = 4.84 sec (sem = 0.15) for the TC condition and m = 17.01 sec (sem = 1.5) for the TUC condition.

Mean Fixation Duration

Fixations were computed using the I-DT algorithm [10] with a maximum dispersion of 50 px, a minimum duration of 100 ms and a maximum duration of 1500 ms. To test the effect of experimental condition (TUC vs. TC) and idiosyncratic differences between participants, we analyzed mean fixation durations using a linear mixed effects analysis.

- Significant effect of the experimental condition (χ2(1) = 7.93, p < 0.05)

- Significant differences between participants across the experimental conditions (χ2(3) = 1376, p < 0.001)

- Effect of experimental condition was significantly different across participants (interaction) (χ2(2) = 37.53, p < 0.001)
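The dispersion-threshold fixation detection described at the start of this section can be sketched as follows. This is a minimal illustration in the spirit of I-DT [10], not the implementation used for the results. The function name, the `(t_ms, x_px, y_px)` sample layout and the demo data are assumptions, and discarding fixations longer than the maximum duration is one possible reading of that parameter.

```python
def idt_fixations(samples, max_dispersion=50.0, min_duration=100, max_duration=1500):
    """Detect fixations in gaze samples given as (t_ms, x_px, y_px) tuples.

    Returns a list of (onset_ms, duration_ms, centroid_x, centroid_y).
    """
    def dispersion(window):
        # Dispersion as used in I-DT: horizontal extent plus vertical extent.
        xs = [x for _, x, _ in window]
        ys = [y for _, _, y in window]
        return (max(xs) - min(xs)) + (max(ys) - min(ys))

    fixations = []
    start, n = 0, len(samples)
    while start < n:
        # Grow the initial window until it spans the minimum duration.
        end = start
        while end < n and samples[end][0] - samples[start][0] < min_duration:
            end += 1
        if end >= n:
            break  # remaining samples cannot span the minimum duration
        if dispersion(samples[start:end + 1]) > max_dispersion:
            start += 1  # no fixation starts here; slide the window by one sample
            continue
        # Extend the window for as long as the dispersion stays below threshold.
        while end + 1 < n and dispersion(samples[start:end + 2]) <= max_dispersion:
            end += 1
        window = samples[start:end + 1]
        duration = window[-1][0] - window[0][0]
        # Assumption: fixations exceeding the maximum duration are discarded.
        if duration <= max_duration:
            cx = sum(x for _, x, _ in window) / len(window)
            cy = sum(y for _, _, y in window) / len(window)
            fixations.append((window[0][0], duration, cx, cy))
        start = end + 1
    return fixations


# Synthetic demo data (hypothetical): two stable gaze clusters, 10 ms sampling.
demo = [(i * 10, 100 + i % 2, 100) for i in range(30)]
demo += [(300 + i * 10, 400, 300 + i % 3) for i in range(30)]
fixations = idt_fixations(demo)
```

Mean fixation duration per participant and condition would then be the average of the `duration_ms` entries over all trials of that participant in that condition.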

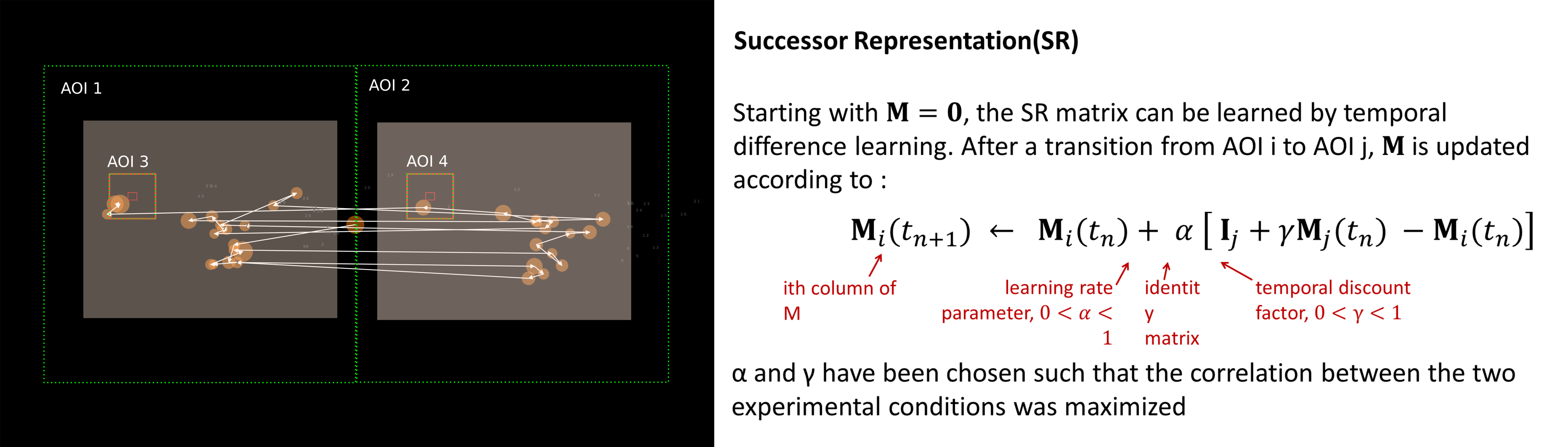

Scan Path Analysis

Scan patterns represent spatial as well as temporal information. Traditionally, they are modeled as a time series of fixations in areas of interest (AOI). It has recently been claimed that there is a lack of methods incorporating both spatial and temporal information of gaze data [11]. Therefore, we chose two models for comparison that differ in their capability to process the temporal aspect of the scan path data:

- Transition matrix model (TM)

- Successor representation model (SR) [12, 13].
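To make the difference between the two representations concrete, the sketch below builds both from a single AOI sequence. The transition matrix only counts immediate AOI-to-AOI steps, while the successor representation is learned with a temporal-difference style update, so that it also accumulates discounted expectations about later fixations. The function names, the row-wise update, and the values of the learning rate `alpha` and discount `gamma` are illustrative assumptions and not the settings used on this poster.

```python
def transition_matrix(seq, n_aoi):
    """First-order transition probabilities between AOIs (rows sum to 1 or 0)."""
    counts = [[0.0] * n_aoi for _ in range(n_aoi)]
    for i, j in zip(seq, seq[1:]):
        counts[i][j] += 1.0
    for row in counts:
        total = sum(row)
        if total > 0:
            row[:] = [c / total for c in row]
    return counts


def successor_representation(seq, n_aoi, alpha=0.1, gamma=0.5):
    """Temporal-difference estimate of discounted future AOI visits.

    For each observed step i -> j, row i moves toward
    (one-hot of j) + gamma * (row j), so multi-step structure accumulates.
    """
    M = [[0.0] * n_aoi for _ in range(n_aoi)]
    for i, j in zip(seq, seq[1:]):
        target = [(1.0 if k == j else 0.0) + gamma * M[j][k] for k in range(n_aoi)]
        M[i] = [M[i][k] + alpha * (target[k] - M[i][k]) for k in range(n_aoi)]
    return M


# Hypothetical scan path cycling through three AOIs.
scan_path = [0, 1, 2, 0, 1, 2, 0, 1, 2]
tm = transition_matrix(scan_path, 3)
sr = successor_representation(scan_path, 3)
```

In this toy sequence AOI 2 never directly follows AOI 0, so `tm[0][2]` is zero, whereas `sr[0][2]` becomes positive because AOI 2 is reliably reached two steps after AOI 0. This is the kind of prolonged temporal information the SR model can represent and the TM model cannot.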

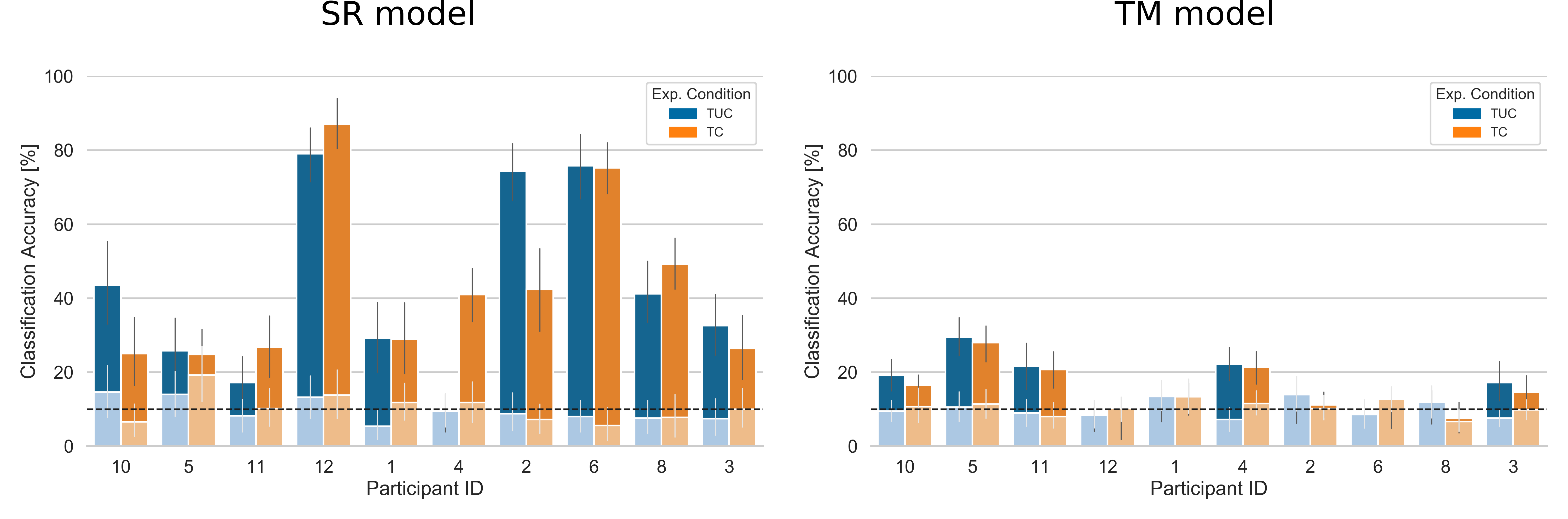

Classification Analysis

If individual differences in scan patterns were better captured by the SR model than by the TM model, this would suggest an important role of the prolonged temporal information incorporated in the SR model representation.

Scan path representations (SR model output vs. TM model output) served as input to a classification analysis that aimed at identifying individual participants. We used a Random Forest classifier as a state-of-the-art classification model and compared its performance with a classifier that predicted participant IDs uniformly at random. The reported performance scores are averages over five repetitions of stratified 5-fold cross-validation.

Average classification accuracy was lower for the TM model than for the SR model (main effect of model: F(1) = 14.674, p < 0.05).
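The evaluation protocol above (five repetitions of stratified 5-fold cross-validation, compared against a uniform random guesser) can be sketched with the standard library alone. The Random Forest itself is not reproduced here; the sketch only shows how the folds and the chance-level baseline could be obtained. All names, the seed, and the layout of 10 participants with 22 trials each are assumptions made for illustration.

```python
import random
from collections import defaultdict


def repeated_stratified_kfold(labels, n_splits=5, n_repeats=5, seed=0):
    """Yield (train_indices, test_indices); every fold keeps class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    for _ in range(n_repeats):
        folds = [[] for _ in range(n_splits)]
        for indices in by_class.values():
            shuffled = indices[:]
            rng.shuffle(shuffled)
            # Deal each participant's trials round-robin across the folds.
            for pos, idx in enumerate(shuffled):
                folds[pos % n_splits].append(idx)
        for k in range(n_splits):
            train = [i for f in range(n_splits) if f != k for i in folds[f]]
            yield train, folds[k]


def uniform_baseline_accuracy(labels, seed=0):
    """Mean accuracy of guessing a participant ID uniformly at random."""
    rng = random.Random(seed)
    classes = sorted(set(labels))
    scores = []
    for _, test in repeated_stratified_kfold(labels, seed=seed):
        hits = sum(rng.choice(classes) == labels[i] for i in test)
        scores.append(hits / len(test))
    return sum(scores) / len(scores)


# Hypothetical layout: 10 participants, 22 trials each.
labels = [pid for pid in range(10) for _ in range(22)]
splits = list(repeated_stratified_kfold(labels))
baseline_acc = uniform_baseline_accuracy(labels)
```

With 10 participants, the uniform baseline is expected to be correct in about 1 of 10 trials, which is the chance level against which the classifier's accuracy is judged.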

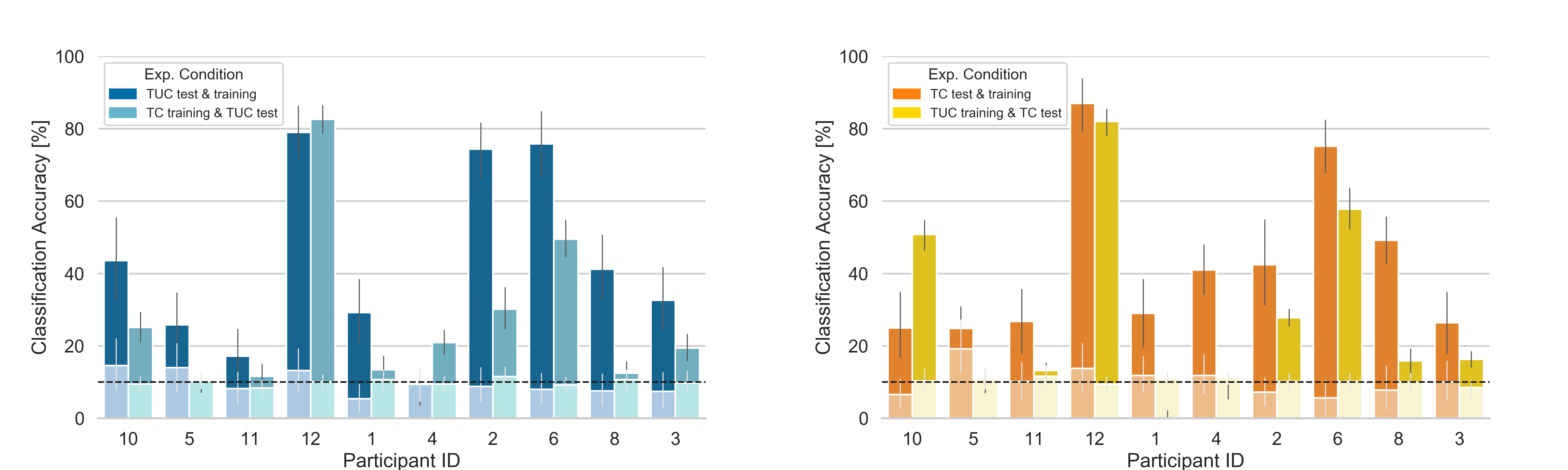

Transfer Classification

If the inter-individual differences between observers were robust across the experimental conditions, we would expect a similar classification result even when the model is trained on the incongruent data set. If, however, the inter-individual differences were significantly affected by the different internal states, we would expect the classification accuracy to drop.

We ran the same classification analysis but with different test and training data (congruent vs. incongruent).

Classification performance dropped on average when training and test data came from incongruent conditions (main effect of congruency: F(1) = 7.632, p < 0.05). However, most participants were still classified above chance.
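The congruent versus incongruent comparison can be illustrated with a toy example. To stay self-contained, the sketch swaps in a nearest-centroid classifier as a simple stand-in for the Random Forest. The feature vectors are invented for illustration and are not data from the experiment; they only mimic a case in which one participant's scan path representation shifts under time pressure.

```python
def fit_centroids(X, y):
    """Nearest-centroid stand-in classifier: mean feature vector per participant."""
    sums, counts = {}, {}
    for features, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(features))
        for k, value in enumerate(features):
            acc[k] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, X):
    """Assign each feature vector to the participant with the closest centroid."""
    def sq_dist(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))
    return [min(centroids, key=lambda label: sq_dist(centroids[label], x)) for x in X]


def accuracy(centroids, X, y):
    preds = predict(centroids, X)
    return sum(p == t for p, t in zip(preds, y)) / len(y)


# Toy features (hypothetical): training trials from the TUC condition.
tuc_train_X = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
tuc_train_y = [0, 0, 1, 1]
model = fit_centroids(tuc_train_X, tuc_train_y)

# Congruent: held-out trials from the same condition as the training data.
congruent_acc = accuracy(model, [[0.8, 0.2], [0.2, 0.8]], [0, 1])

# Incongruent: trials from the TC condition; participant 0 drifts toward participant 1.
tc_X = [[0.8, 0.2], [0.4, 0.6], [0.2, 0.8], [0.1, 0.9]]
tc_y = [0, 0, 1, 1]
incongruent_acc = accuracy(model, tc_X, tc_y)
```

In this toy case the congruent accuracy is 1.0 and the incongruent accuracy is 0.75, which is still above the 0.5 chance level for two participants. It mirrors the qualitative pattern described above: a drop under transfer, but with individual signatures still partly recoverable.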

Summary & Conclusions

- We found a significant interaction between individual differences in mean fixation durations and the experimental conditions (TC vs. TUC), indicating that the change in internal state affected participants to different degrees.

- Overall the SR model [12,13] performed significantly better than the TM model, suggesting that prolonged temporal information in scan path data might add another quality to the data for robustly classifying individual observers.

- We show that based on the SR model [12,13], it was possible to extract inter-individual variance across contextual changes to some extent.

- Our results can give valuable insights into how to model gaze data robustly for personalized application scenarios under varying conditions, which should be further investigated in a larger context.

References

[1] Timothy J. Andrews and David M. Coppola. 1999. Idiosyncratic Characteristics of Saccadic Eye Movements When Viewing Different Visual Environments. Vision Research 39, 17 (1999), 2947–2953.

[2] Adrien Baranes, Pierre-Yves Oudeyer, and Jacqueline Gottlieb. 2015. Eye Movements Reveal Epistemic Curiosity in Human Observers. Vision Research 117 (2015), 81–90.

[3] Taylor R. Hayes and John M. Henderson. 2017. Scan Patterns During Real-World Scene Viewing Predict Individual Differences in Cognitive Capacity. Journal of Vision 17, 5 (2017), 23, 1–17.

[4] Oya Celiktutan and Yiannis Demiris. 2018. Inferring Human Knowledgeability from Eye Gaze in Mobile Learning Environments. In Proceedings of the European Conference on Computer Vision (ECCV). 0–0.

[5] Leandro L. Di Stasi, Carolina Diaz-Piedra, Héctor Rieiro, José M. Sánchez Carrión, Mercedes Martin Berrido, Gonzalo Olivares, and Andrés Catena. 2016. Gaze Entropy Reflects Surgical Task Load. Surgical Endoscopy 30, 11 (2016), 5034–5043.

[6] Elena Arabadzhiyska, Okan Tarhan Tursun, Karol Myszkowski, Hans-Peter Seidel, and Piotr Didyk. 2017. Saccade Landing Position Prediction for Gaze-Contingent Rendering. ACM Transactions on Graphics (TOG) 36, 4 (2017), 50.

[7] Aoqi Li and Zhenzhong Chen. 2018. Personalized Visual Saliency: Individuality Affects Image Perception. IEEE Access 6 (2018), 16099–16109.

[8] Moritz Kassner, William Patera, and Andreas Bulling. 2014. Pupil: An Open Source Platform for Pervasive Eye Tracking and Mobile Gaze-Based Interaction. In Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication. 1151–1160.

[9] Preeti Sareen, Krista A. Ehinger, and Jeremy M. Wolfe. 2016. CB Database: A Change Blindness Database for Objects in Natural Indoor Scenes. Behavior research methods 48, 4 (2016), 1343–1348.

[10] Dario D. Salvucci and Joseph H. Goldberg. 2000. Identifying Fixations and Saccades in Eye-Tracking Protocols. In ETRA ’00: Proceedings of the 2000 Symposium on Eye Tracking Research & Applications. ACM, New York, NY, USA, 71–78.

[11] Michał Król and Magdalena Ewa Król. 2019. A Novel Eye Movement Data Transformation Technique that Preserves Temporal Information: A Demonstration in a Face Processing Task. Sensors 19, 10 (2019), 2377.

[12] Taylor R. Hayes and John M. Henderson. 2017. Scan Patterns During Real-World Scene Viewing Predict Individual Differences in Cognitive Capacity. Journal of Vision 17, 5 (2017), 23, 1–17.

[13] Taylor R. Hayes, Alexander A. Petrov, and Per B. Sederberg. 2011. A Novel Method for Analyzing Sequential Eye Movements Reveals Strategic Influence on Raven’s Advanced Progressive Matrices. Journal of Vision 11, 10 (2011), 10.